How to A/B Test Your Rewarded Video Ad Monetization

Rewarded video ad monetization has become one of the most effective strategies for game developers and app publishers to generate revenue while maintaining positive user experiences. Unlike traditional interstitial or banner ads, rewarded video ads offer users tangible in-game benefits—such as extra lives, virtual currency, or premium content—in exchange for watching a video advertisement. This mutually beneficial exchange creates a win-win scenario: users receive valuable rewards, advertisers gain engaged viewers, and developers earn ad revenue.

However, implementing rewarded video ads isn’t a one-size-fits-all solution. What works brilliantly for one game might fall flat in another. This is where A/B testing becomes essential. A/B testing in the context of ad monetization involves creating controlled experiments to compare different variations of your rewarded video ad implementation, measuring their impact on key metrics like eCPM (effective cost per mille), ad revenue, and user retention. By systematically testing different approaches, you can identify the optimal configuration that maximizes both your monetization potential and user satisfaction. In this comprehensive guide, we’ll explore how to effectively A/B test your rewarded video ad monetization strategy to drive sustainable revenue growth.

Contents

- 1 Why A/B Testing Matters in Rewarded Video Ads

- 2 What You Can A/B Test in Rewarded Video Ads

- 3 Tools and Platforms for A/B Testing

- 4 Setting Up an A/B Test: Step-by-Step

- 5 Key Metrics to Track

- 6 Common Mistakes to Avoid

- 7 Final Thoughts & Best Practices

- 8 Start Optimizing Your Rewarded Video Ad Revenue Today

Why A/B Testing Matters in Rewarded Video Ads

Every game and application has its own unique ecosystem of user behavior, engagement patterns, and monetization dynamics. A monetization strategy that generates impressive results in a casual puzzle game might completely miss the mark in a competitive multiplayer title. Player demographics, session lengths, gameplay loops, and core mechanics all influence how users interact with rewarded ads.

This variability makes A/B testing absolutely critical for game monetization success. Rather than relying on industry best practices or educated guesses, A/B testing provides concrete, data-driven insights specific to your user base. By testing different ad monetization approaches, you can discover which configurations yield the highest conversion rates, optimal eCPM values, and best user engagement metrics.

The benefits extend beyond immediate revenue gains. Effective A/B testing helps you improve ARPDAU (Average Revenue Per Daily Active User), a crucial metric that directly impacts your game’s financial viability. When you understand exactly which reward values, ad placements, and frequency caps resonate with your players, you can fine-tune your entire monetization strategy to maximize LTV, the lifetime value each player generates over their entire relationship with your game.

Moreover, A/B testing helps you avoid costly mistakes. Without testing, you might implement changes that inadvertently harm user retention or engagement. By testing variations with a subset of your audience first, you can validate improvements before rolling them out universally, protecting your game’s health while optimizing revenue.

What You Can A/B Test in Rewarded Video Ads

The beauty of A/B testing rewarded video ads lies in the numerous variables you can experiment with. Each element of your implementation offers opportunities for optimization:

Placement Timing: The moment you present a rewarded video ad opportunity significantly impacts user acceptance rates. You can test showing ads before gameplay sessions begin, immediately after completing levels, during natural pause points, or after failed attempts. Some games find success with pre-level rewards that give players advantages, while others achieve better results with post-level rewards that feel like victory bonuses. Testing different timing strategies reveals when your users are most receptive to a rewarded ad offer.

Ad Frequency: How often should users see rewarded ad opportunities? Too frequent, and you risk annoying players; too infrequent, and you leave money on the table. Test different frequency caps—perhaps allowing ads every 30 seconds versus every 5 minutes, or limiting users to 5 ads per session versus unlimited viewing. The optimal frequency balances revenue maximization with user experience preservation.

Reward Value: The incentive you offer dramatically affects ad completion rates. Testing low versus high rewards helps you find the sweet spot that motivates views without devaluing your in-game economy. For example, you might test offering 10 coins versus 25 coins, or 1 extra life versus 3 lives. Higher rewards typically increase ad engagement but can reduce the value perception of your virtual currency or decrease in-app purchase conversion.

Ad Provider and Network: Different ad networks deliver varying eCPM rates, fill rates, and ad quality. Testing AdMob rewarded ads against other providers, or implementing waterfall configurations with multiple demand sources, can significantly impact your rewarded ad revenue. Some networks excel in specific geographic regions or game genres, making testing essential for optimization.

Ad Creative and Format: While you don’t control the specific ads shown, you can test different ways of presenting the rewarded ad opportunity. Experiment with different button designs, messaging copy, or visual treatments. Test whether “Watch Video for 50 Coins” performs better than “Get Free Coins” or “Boost Your Progress.” These seemingly minor variations in how you present the offer can yield substantial differences in engagement.

Ad Cooldowns: After users watch a rewarded ad, how long should they wait before the next opportunity becomes available? Testing cooldown periods—whether immediate availability, 30-second waits, or 5-minute intervals—affects both revenue and user satisfaction. Shorter cooldowns can increase revenue but might feel spammy, while longer cooldowns might leave engaged users wanting more monetization opportunities.

User Segmentation: Not all users are created equal. Testing different approaches for new versus returning players, paying versus non-paying users, or high-engagement versus casual players allows you to tailor experiences that maximize value from each segment while maintaining appropriate user experiences across your entire player base.
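As a minimal sketch of how the frequency caps and cooldowns described above might be expressed as testable parameters, the Python snippet below gates the rewarded-ad button on two values that a control group and a variant group could set differently. The class name, field names, and numbers are hypothetical illustrations and are not tied to any ad SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AdPacingConfig:
    """Pacing parameters an A/B variant might override (hypothetical names)."""
    cooldown_seconds: float    # minimum wait between two rewarded ads
    max_ads_per_session: int   # session frequency cap


def can_offer_ad(config: AdPacingConfig,
                 ads_watched_this_session: int,
                 seconds_since_last_ad: Optional[float]) -> bool:
    """Return True if the rewarded-ad button may be shown right now."""
    if ads_watched_this_session >= config.max_ads_per_session:
        return False  # session cap reached
    if seconds_since_last_ad is None:
        return True   # no ad watched yet this session, so no cooldown applies
    return seconds_since_last_ad >= config.cooldown_seconds


# Two groups that differ only in cooldown length.
control = AdPacingConfig(cooldown_seconds=300, max_ads_per_session=5)
variant = AdPacingConfig(cooldown_seconds=30, max_ads_per_session=5)

# A player who watched one ad 45 seconds ago:
print(can_offer_ad(control, 1, 45))  # False (still inside the 5-minute cooldown)
print(can_offer_ad(variant, 1, 45))  # True  (30-second cooldown has elapsed)
```

Keeping both values in one small configuration object makes it easier to serve them from a remote configuration tool and to confirm that two test groups differ only where intended.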

Tools and Platforms for A/B Testing

Successfully running A/B tests for your rewarded video ad monetization requires the right technology stack. Fortunately, numerous monetization tools and platforms support sophisticated testing capabilities.

Remote configuration tools like Firebase A/B Testing provide robust frameworks for experimenting with different monetization parameters without requiring app updates. You can modify reward values, ad frequencies, and placement timing on the fly, quickly iterating based on real-time performance data. Firebase integrates seamlessly with Google Analytics, providing comprehensive visibility into how your tests impact user behavior and revenue metrics.

Dedicated ad monetization platforms offer built-in A/B testing features designed specifically for rewarded video optimization. AppLixir, for instance, lets you segment users and test different configurations while tracking detailed performance metrics. Unity’s rewarded ads, served through Unity Monetization, offer similar testing capabilities, with analytics tools that help you understand how different approaches affect player engagement and revenue generation.

For deeper analytical insights, specialized tools like GameAnalytics, Amplitude, or Mixpanel help you track user behavior patterns, segment audiences, and measure how ad monetization impacts broader engagement metrics. These platforms excel at revealing correlations between ad interactions and retention rates, session lengths, and lifetime value—insights that inform more strategic testing decisions. Many developers implement hybrid approaches, using a video monetization platform for ad serving and revenue tracking while leveraging separate analytics tools for comprehensive user behavior analysis. This combination provides both the specialized ad performance metrics and the broader context needed to make informed optimization decisions.

When selecting your monetization tool stack, prioritize platforms that support granular user segmentation, real-time metric tracking, and easy configuration management. The ability to quickly launch tests, monitor performance, and roll back problematic changes makes the difference between effective optimization and frustrating experimentation.

Setting Up an A/B Test: Step-by-Step

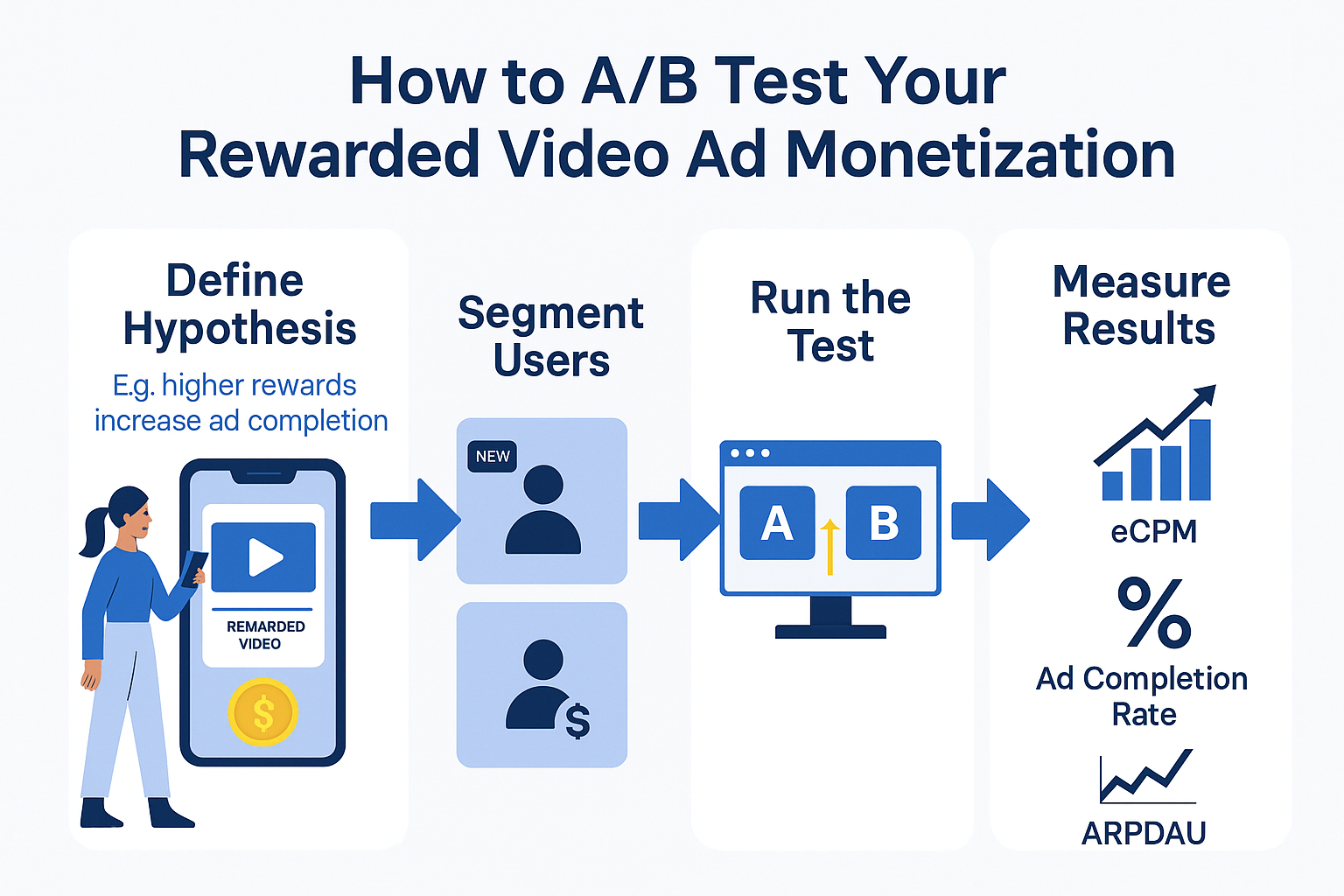

Implementing effective A/B tests requires methodical planning and execution. Follow this proven framework to maximize your learning and revenue impact:

Step 1: Define Your Hypothesis

Every test should begin with a clear, testable hypothesis. Rather than randomly changing variables, identify specific questions you want to answer. For example: “Will increasing the reward from 15 coins to 30 coins increase ad completion rates without harming in-app purchase conversion?” or “Does showing rewarded ads after failed levels generate more revenue than showing them after successful completions?” A well-formed hypothesis keeps your test focused and makes interpreting results straightforward.

Step 2: Segment Your Users

Divide your user base into meaningful test groups. Random assignment ensures statistical validity, but you might also create segments based on user characteristics. Consider separating new users (who are still learning your game) from veterans (who understand your economy). Distinguish paying customers (who might respond differently to free rewards) from non-paying players (who may rely more heavily on ad-based progression). This segmentation reveals whether different user types require different monetization strategies.
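If you assign users yourself rather than relying on a platform’s built-in experiment tooling, one common approach is to hash a stable user ID so that each player always lands in the same group. The sketch below illustrates that idea together with a simple segment label for later reporting; the function names, the 7-day “new user” threshold, and the experiment name are all assumptions made for the example.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Map a user to a variant deterministically, so repeat sessions agree."""
    # Salting with the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an integer and spread it over the variants.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


def segment_of(is_payer: bool, days_since_install: int) -> str:
    """Label a user for per-segment reporting (the 7-day threshold is illustrative)."""
    tenure = "new" if days_since_install < 7 else "veteran"
    spend = "payer" if is_payer else "non_payer"
    return f"{tenure}_{spend}"


variants = ["control", "reward_30_coins"]
user = "player-12345"
print(assign_variant(user, "reward_value_test", variants), segment_of(False, 3))
```

Assignment stays random with respect to user characteristics, while the segment label is recorded separately so results can be broken down by user type afterwards.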

Step 3: Choose Your Variables

The golden rule of A/B testing: test one variable at a time. If you simultaneously change reward values, ad frequency, and placement timing, you won’t know which modification drove any observed changes. Isolating variables ensures clear cause-and-effect relationships. Create a control group experiencing your current implementation and one or more test groups with single-variable modifications. For more complex testing, consider multivariate approaches, but only after mastering single-variable experiments.
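One lightweight way to enforce the one-variable rule is to compare each variant’s configuration against the control before launch. The following sketch assumes configurations are stored as flat dictionaries; the parameter names and values are hypothetical.

```python
_MISSING = object()  # sentinel so a missing key differs from any real value


def changed_parameters(control: dict, variant: dict) -> set:
    """Return the names of parameters whose values differ between two configs."""
    keys = control.keys() | variant.keys()
    return {k for k in keys
            if control.get(k, _MISSING) != variant.get(k, _MISSING)}


def is_single_variable_test(control: dict, variant: dict) -> bool:
    """True when the variant changes exactly one parameter relative to control."""
    return len(changed_parameters(control, variant)) == 1


control = {"reward_coins": 15, "cooldown_seconds": 300, "placement": "post_level"}
variant_a = {**control, "reward_coins": 30}
variant_b = {**control, "reward_coins": 30, "cooldown_seconds": 30}

print(is_single_variable_test(control, variant_a))        # True
print(sorted(changed_parameters(control, variant_b)))     # ['cooldown_seconds', 'reward_coins']
```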

Step 4: Run the Test

Launch your test using your chosen platform’s A/B testing tools. Ensure your sample sizes are sufficient for statistical significance, because small tests might show dramatic differences that disappear with larger audiences. A common rule of thumb is to run tests until each variation has been experienced by at least several thousand users, though the exact number depends on your baseline rate and the size of the effect you want to detect. Avoid making changes mid-test, as this invalidates your results. Set a predetermined test duration based on your traffic levels and weekly usage patterns to ensure you capture representative behavior across different times and days.
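To make “sufficient sample size” more concrete, the sketch below applies the standard normal-approximation formula for comparing two proportions. The function name, the 30% baseline, and the 33% target are hypothetical; the 5% significance level and 80% power are conventional defaults rather than values taken from this guide.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in each group to detect p_control vs p_variant (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # critical value for significance
    z_beta = NormalDist().inv_cdf(power)            # critical value for power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: detect a lift from 30% to 33% of users watching a rewarded ad.
print(sample_size_per_group(0.30, 0.33))  # roughly 3,760 users per group
```

Smaller expected lifts push the requirement up quickly, since the required sample grows with the inverse square of the difference you want to detect.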

Step 5: Measure Results

Focus on metrics that matter for mobile game monetization success. Track eCPM to understand the revenue per thousand impressions. Monitor ARPDAU to see how the test affects average revenue across your entire daily active user base. Watch retention rates to ensure monetization changes aren’t driving users away. Calculate ad completion rates and reward redemption rates to understand engagement levels. Don’t just look at immediate revenue—consider how changes affect long-term metrics like LTV and session frequency.

Use an ad revenue calculator to project the long-term financial impact of successful variations. If a test shows a 10% increase in ARPDAU but a 5% decrease in day-7 retention, you need to model whether the short-term revenue gain compensates for reduced lifetime value.
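The trade-off above can be modeled in a few lines. This sketch treats LTV over a fixed horizon as ARPDAU multiplied by expected active days (the sum of daily retention), uses a power-law retention curve, and assumes the 5% retention loss applies uniformly to every day after install. All of these are simplifying assumptions, and the curve parameters and the $0.05 ARPDAU are invented for illustration.

```python
def power_law_retention(d1: float, decay: float, horizon_days: int) -> list:
    """Day 0 is 1.0 by definition; later days follow d1 * day ** (-decay)."""
    return [1.0] + [d1 * day ** (-decay) for day in range(1, horizon_days + 1)]


def projected_ltv(arpdau: float, retention_by_day: list) -> float:
    """LTV over the horizon = ARPDAU * expected active days."""
    return arpdau * sum(retention_by_day)


control_curve = power_law_retention(d1=0.40, decay=0.6, horizon_days=90)
# Assumption: the 5% drop seen at day 7 scales every post-install day equally.
variant_curve = [1.0] + [r * 0.95 for r in control_curve[1:]]

control_ltv = projected_ltv(0.050, control_curve)
variant_ltv = projected_ltv(0.050 * 1.10, variant_curve)  # 10% higher ARPDAU
print(f"Variant LTV / control LTV: {variant_ltv / control_ltv:.3f}")
```

Under uniform scaling the ratio always falls between 1.045 and 1.10, so the revenue gain outweighs the retention loss. If the retention gap instead widens over time, the conclusion can reverse, which is why it is worth rerunning the projection with retention curves measured from your own test groups.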

Key Metrics to Track

Understanding which metrics to monitor separates successful monetization optimization from misguided experimentation. These essential measurements reveal the true impact of your A/B tests:

eCPM (Effective Cost Per Mille): This fundamental metric shows how much revenue you generate per thousand ad impressions. Higher eCPM indicates more valuable ad inventory. When testing different providers or formats, eCPM directly reflects which approach delivers better monetization. However, don’t optimize for eCPM alone: total revenue is eCPM multiplied by impressions (in thousands), so a high eCPM with low impression volume can generate less total revenue than a moderate eCPM with high engagement.

Fill Rate: This percentage indicates how often ad networks successfully return an ad when requested. A 90% fill rate means 10% of ad requests go unfilled, representing lost revenue opportunities. Testing different ad providers or implementing waterfall configurations can improve fill rates. Low fill rates particularly impact mobile game advertising revenue in regions with less ad demand.

Ad Completion Rate: What percentage of users who begin watching rewarded video ads watch them to completion? This metric reflects both ad quality and reward value. If completion rates drop below 80%, your ads may be too long or too irrelevant, or your rewards may not be compelling enough. Testing different reward values typically shows a direct correlation with completion rates.

Reward Redemption Rate: How many users who receive rewards actually redeem them? High redemption rates indicate rewards are valued and integrated meaningfully into your game economy. Low redemption rates suggest either poor reward-gameplay integration or reward values that don’t justify the viewing effort.

ARPDAU (Average Revenue Per Daily Active User): This crucial metric divides total daily revenue by daily active users, showing the average value of each player. ARPDAU improvements indicate successful monetization optimization. When A/B testing, watch for ARPDAU changes across test segments—even small percentage improvements compound significantly over time.

Retention Rate: Track day-1, day-7, and day-30 retention rates to understand how monetization changes affect long-term engagement. Aggressive monetization might boost short-term revenue while destroying retention. Conversely, well-implemented rewarded video ads can actually improve retention by helping players progress through difficult sections.

LTV (Lifetime Value): The ultimate metric, LTV represents total revenue per user over their entire relationship with your game. Effective A/B testing optimizes for LTV, not just immediate revenue. A test that increases daily revenue by 20% but reduces average player lifetime from 90 days to 60 days can actually decrease overall value: if the 20% gain is per player per day, each player now yields 1.2 × 60 = 72 days’ worth of baseline revenue instead of 90.

Monitoring these metrics through a comprehensive video monetization platform provides the visibility needed to make informed decisions about which test variations to implement permanently and which to abandon.
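The ratio metrics defined above are simple to compute from raw daily counts. The helper functions below are a minimal sketch; the function names and the sample figures are invented for illustration, with the 90% fill rate chosen to match the example in the text.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Divide safely, returning 0.0 when there is no denominator data yet."""
    return numerator / denominator if denominator else 0.0


def ecpm(revenue: float, impressions: int) -> float:
    """Revenue per thousand ad impressions."""
    return _ratio(revenue, impressions) * 1000


def fill_rate(filled_requests: int, ad_requests: int) -> float:
    """Share of ad requests that returned an ad."""
    return _ratio(filled_requests, ad_requests)


def completion_rate(completed_views: int, started_views: int) -> float:
    """Share of started rewarded videos that were watched to the end."""
    return _ratio(completed_views, started_views)


def arpdau(daily_revenue: float, daily_active_users: int) -> float:
    """Average revenue per daily active user."""
    return _ratio(daily_revenue, daily_active_users)


# Hypothetical figures for one test group on one day.
print(f"eCPM: ${ecpm(540.0, 45_000):.2f}")                         # eCPM: $12.00
print(f"Fill rate: {fill_rate(45_000, 50_000):.0%}")               # Fill rate: 90%
print(f"Completion rate: {completion_rate(38_250, 45_000):.0%}")   # Completion rate: 85%
print(f"ARPDAU: ${arpdau(540.0, 12_000):.3f}")                     # ARPDAU: $0.045
```

Computing the same functions separately for each test group, and for each user segment within a group, gives directly comparable numbers.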

Common Mistakes to Avoid

Even experienced developers fall into predictable A/B testing traps. Avoid these common errors to ensure your monetization strategy tests generate reliable insights:

Testing Too Many Variables Simultaneously: When you change multiple elements at once—say, increasing reward values while also changing ad placement and frequency—you create confusion. If metrics improve, which change deserves credit? If they worsen, which change caused the damage? Resist the temptation to test everything at once. Systematic, single-variable testing takes longer but produces actionable insights that compound over time.

Insufficient Test Duration: Running tests for only a few days risks capturing anomalies rather than true patterns. Weekend behavior often differs dramatically from weekday engagement. Holiday periods show different patterns than normal weeks. New game updates temporarily change user behavior. Run tests for at least one full week, preferably two, to capture representative behavior across your user base’s normal patterns.

Ignoring Statistical Significance: A 5% ARPDAU improvement looks great, but is it real or random variation? Small sample sizes produce misleading results. Use statistical significance calculators to determine whether observed differences reflect genuine effects or random chance. Most experts recommend achieving 95% confidence before declaring test winners. Implementing changes based on statistically insignificant results wastes development resources and can actually harm performance.

Failing to Segment Users: Averaging results across your entire user base might hide important insights. Perhaps a reward increase dramatically improves monetization among non-paying users while slightly reducing in-app purchases among whales. Without segmentation, these opposing effects might cancel out, suggesting no net impact when reality shows significant segment-specific changes. Analyze results across meaningful user segments to understand nuanced impacts.

Changing Parameters Mid-Test: Halfway through a test, you notice one variation performing poorly and feel tempted to modify it. Don’t. Changing test parameters invalidates all data collected to that point. If a variation genuinely harms user experience or revenue, stop the test entirely, fix the problem, and start fresh with proper parameters. Otherwise, see tests through to completion.

Neglecting Long-Term Impact: A test showing 15% ARPDAU improvement looks fantastic—until you check retention rates three weeks later and discover a significant decline. Always monitor how monetization changes affect long-term engagement and LTV. Short-term revenue spikes mean little if they come at the expense of player lifetime and sustainable growth.

Avoiding these mistakes ensures your game monetization strategy evolves based on reliable data rather than misleading signals, whether you monetize HTML5 games or titles on other platforms.
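For the statistical significance check described above, a two-proportion z-test is one standard option when the metric is a rate, such as the share of users who watch a rewarded ad. The sketch below uses only the Python standard library; the user counts are hypothetical. Continuous metrics such as ARPDAU call for a different test (for example Welch’s t-test or a bootstrap), which is not shown here.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both groups share one true rate.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# The same 30% vs 33% rates at two different sample sizes.
_, p_small = two_proportion_z_test(120, 400, 132, 400)
_, p_large = two_proportion_z_test(1200, 4000, 1320, 4000)

print(f"400 users per group:  p = {p_small:.3f}, significant at 95%: {p_small < 0.05}")
print(f"4000 users per group: p = {p_large:.3f}, significant at 95%: {p_large < 0.05}")
```

The identical lift is indistinguishable from noise with 400 users per group yet clears the 95% threshold with 4,000, which illustrates why small samples produce misleading results.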

Final Thoughts & Best Practices

Effective A/B testing isn’t a one-time project; it’s an ongoing commitment to data-driven monetization optimization. The mobile advertising landscape constantly evolves, with new ad formats, networks, and user preferences emerging regularly. What works today might become less effective tomorrow as players adapt and market conditions change.

Approach A/B testing as a continuous improvement cycle. After implementing winning variations, identify the next optimization opportunity. Always test with clear hypotheses and proper control groups rather than making arbitrary changes. This systematic approach compounds small improvements into significant revenue gains over time.

Remember that successful game monetization extends beyond pure revenue maximization. User experience matters tremendously. Rewarded video ads work precisely because they enhance rather than interrupt gameplay. Overly aggressive monetization, even if it temporarily boosts metrics, can destroy the player trust and engagement that makes sustainable revenue possible.

Balance your focus between immediate metrics and long-term health. Yes, watch ARPDAU and eCPM closely, but never lose sight of retention rates and LTV. The most successful monetization models for games generate strong revenue while keeping players happy, engaged, and returning day after day.

Integrate A/B testing into your broader monetization strategy rather than treating it as a separate activity. Use insights from rewarded video ad tests to inform decisions about in-app purchases, battle passes, and other revenue streams. Often, the lessons learned optimizing one monetization channel apply across your entire economy.

Finally, don’t fear failure. Not every test will yield positive results, and that’s perfectly fine. Tests that “fail” still provide valuable information—you’ve learned what doesn’t work, saving yourself from implementing harmful changes across your entire user base. Each test, whether successful or not, brings you closer to the optimal monetization configuration for your unique game and audience.

Start Optimizing Your Rewarded Video Ad Revenue Today

Ready to transform your rewarded video ad performance? The insights and frameworks shared in this guide give you a starting point for systematic A/B testing of your rewarded video ad monetization.

Whether you’re just implementing your first rewarded ads or optimizing an established monetization strategy, A/B testing unlocks the data-driven insights needed to maximize revenue while maintaining excellent user experiences. The difference between guessing at optimal configurations and knowing with certainty can mean thousands or even millions of dollars in additional revenue over your game’s lifetime.

AppLixir’s video monetization platform makes implementing these testing strategies straightforward with built-in A/B testing features, comprehensive analytics, and optimization tools specifically designed for mobile and HTML5 game monetization. Our platform helps developers maximize video ad earnings through intelligent ad serving, competitive eCPM rates, and seamless integration with popular game engines.

Ready to monetize games more effectively? Visit AppLixir to explore our rewarded video ad code examples, sign up for our SDK, and start optimizing your ad revenue today. Our team of monetization experts can help you implement testing strategies tailored to your specific game and audience, turning insights into revenue growth.